Archive

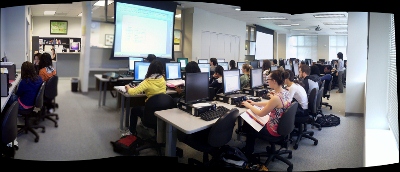

Summer 2012 Learning materials creation clinic for preparing oral assessments/assignments

1. I am holding a “clinic”, open to anybody who needs help preparing classes that use oral assessments/assignments in the LRC this fall term. RSVP if interested.

2. This clinic focuses on creating materials for delivery in specific upcoming courses. It is based on, but different from, my faculty workshops on this topic. If you have not attended, please view the link below for what was covered in the workshops:

a. https://thomasplagwitz.com/2011/08/18/sanako-study-1200-workshop-spring-2011/

Specifically:

1. Materials creation

- with SANAKO

i. Make a teacher audio recording for a model-imitation/question-response oral exam: https://thomasplagwitz.com/2012/01/25/how-a-teacher-best-adds-cues-and-pauses-to-an-mp3-recording-with-audacity-to-create-student-language-exercises/

ii. Make a teacher recording (https://thomasplagwitz.com/2012/01/11/recording-with-audacity/) for model imitation with voice insert (like a reading practice homework assignment, https://thomasplagwitz.com/2012/01/24/how-a-teacher-creates-audio-recordings-for-use-with-sanako-student-voice-insert-mode/).

- with Moodle

i. Moodle Kaltura webcam recording assignment: https://thomasplagwitz.com/2011/11/02/how-to-grade-a-moodle-straming-video-assignment-and-moodle-streaming-video-recording-assignment-glitch-2/

ii. Prepare the upload of learning materials to Moodle metacourses: https://thomasplagwitz.com/2011/06/17/moodle-metacourses-part-iv-the-support-workflow-uploading/ and https://thomasplagwitz.com/2011/01/26/moodle-batch-upload-learning-materials-give-students-access/

- with PowerPoint (visual speaking cues with timers): https://thomasplagwitz.com/2009/11/18/create-a-powerpoint-slide-with-a-timer-from-template-for-a-timed-audio-recording-exercise/

- Materials delivery with SANAKO

- Remote-control student PCs and collaborate over headphones: https://thomasplagwitz.com/2012/05/04/how-you-can-view-the-computer-screens-of-your-class-using-sanako-study-1200/

- Pair students’ audio using headphones: https://thomasplagwitz.com/2011/05/11/study-1200-pairing/

- You must bring some assessment ideas that fit into your skills course, which we will turn into audio recordings. You can also bring prerecorded audio files from textbooks as MP3, which we can edit to turn them into materials. If you would like some examples of what colleagues have done:

- With Moodle Kaltura: https://thomasplagwitz.com/feed/?category_name=learning-usage-samples&tag=kaltura

- With Sanako oral (formative) assessments/(outcome) exams: please email me. I can make samples available to you that we do not publish, in order to preserve exam integrity.

Using NLP tools to automate production and correction of interactive learning materials for blended learning templates in the Language Resource Center. Presentation at CALICO 2012, Notre Dame University

View screencast here.


Useful debugging tools for setting up your first DKPro project in Eclipse

My DKPro settings.xml

<?xml version="1.0" encoding="utf-8"?>

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<profiles>

<profile>

<id>ukp-oss-releases</id>

<repositories>

<repository>

<id>ukp-oss-releases</id>

<url>http://zoidberg.ukp.informatik.tu-darmstadt.de/artifactory/public-releases</url>

<releases>

<enabled>true</enabled>

<updatePolicy>never</updatePolicy>

<checksumPolicy>warn</checksumPolicy>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>ukp-oss-releases</id>

<url>http://zoidberg.ukp.informatik.tu-darmstadt.de/artifactory/public-releases</url>

<releases>

<enabled>true</enabled>

<updatePolicy>never</updatePolicy>

<checksumPolicy>warn</checksumPolicy>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</profile>

<profile>

<id>ukp-oss-snapshots</id>

<repositories>

<repository>

<id>ukp-oss-snapshots</id>

<url>http://zoidberg.ukp.informatik.tu-darmstadt.de/artifactory/public-snapshots</url>

<releases>

<enabled>false</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

</repositories>

</profile>

</profiles>

<activeProfiles>

<activeProfile>ukp-oss-releases</activeProfile>

<!-- The preceding profile must not be commented out. -->

<!-- The following entry enables SNAPSHOT versions; comment it out if you do not need them. -->

<activeProfile>ukp-oss-snapshots</activeProfile>

</activeProfiles>

</settings>
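When a profile in a settings file like the one above seems to be ignored, one thing worth checking is whether every `<activeProfile>` names a `<profile>` that the same file actually defines. The following is a minimal sketch of such a check using only the Python standard library. The function name `undefined_active_profiles` and the shortened sample are illustrative assumptions, not part of Maven or DKPro.

```python
import xml.etree.ElementTree as ET

# Namespace declared on the <settings> element of the file above.
SETTINGS_NS = {"m": "http://maven.apache.org/SETTINGS/1.0.0"}

# Hypothetical, shortened sample: one profile defined, two activated.
SAMPLE_SETTINGS = """
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0">
  <profiles>
    <profile><id>ukp-oss-releases</id></profile>
  </profiles>
  <activeProfiles>
    <activeProfile>ukp-oss-releases</activeProfile>
    <activeProfile>ukp-oss-snapshots</activeProfile>
  </activeProfiles>
</settings>
"""


def undefined_active_profiles(settings_xml):
    """Return active profile ids that have no <profile> definition in this file."""
    root = ET.fromstring(settings_xml.strip())
    # Collect the ids of all profiles defined under <profiles>.
    defined = {
        (p.findtext("m:id", default="", namespaces=SETTINGS_NS) or "").strip()
        for p in root.findall("m:profiles/m:profile", SETTINGS_NS)
    }
    # Collect the ids listed under <activeProfiles>, in document order.
    active = [
        (a.text or "").strip()
        for a in root.findall("m:activeProfiles/m:activeProfile", SETTINGS_NS)
    ]
    return [name for name in active if name not in defined]


print(undefined_active_profiles(SAMPLE_SETTINGS))  # ['ukp-oss-snapshots']
```

For the full settings.xml above, the result should be an empty list, since both active profiles are defined. The check only looks at the settings file itself; profiles defined in a POM are outside its scope.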

trp-learning-materials-starting-pom.xml

<?xml version="1.0" encoding="utf-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>de.tudarmstadt.ukp.experiments.trp</groupId>

<artifactId>de.tudarmstadt.ukp.experiments.trp.learning-materials</artifactId>

<version>0.0.1-SNAPSHOT</version>

<parent>

<artifactId>dkpro-parent-pom</artifactId>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<version>2</version>

</parent>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<type>jar</type>

<scope>test</scope>

</dependency>

<dependency>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<!-- not de.tudarmstadt.ukp.dkpro.core.io -->

<artifactId>de.tudarmstadt.ukp.dkpro.core.io.text-asl</artifactId>

<!-- <version>1.3.0</version> -->

<type>jar</type>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core.tokit-asl</artifactId>

<!-- <version>1.4.0-SNAPSHOT</version> -->

<type>jar</type>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core.opennlp-asl</artifactId>

<type>jar</type>

<scope>compile</scope>

</dependency>

<dependency>

<!--

Add de.tudarmstadt.ukp.dkpro.core.stanfordnlp-gpl to the dependencies -->

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core.stanfordnlp-gpl</artifactId>

<!-- tba: type pom and scope -->

</dependency>

<dependency>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core.opennlp-model-tagger-en-maxent</artifactId>

<version>1.5</version>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core-asl</artifactId>

<version>1.4.0-SNAPSHOT</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!--

Add de.tudarmstadt.ukp.dkpro.core-asl with type pom and scope import to dependency management-->

<dependency>

<!--

Add de.tudarmstadt.ukp.dkpro.core-gpl with type pom and scope import to dependency management-->

<groupId>de.tudarmstadt.ukp.dkpro.core</groupId>

<artifactId>de.tudarmstadt.ukp.dkpro.core-gpl</artifactId>

<version>1.4.0-SNAPSHOT</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

</project>
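Several dependencies in the POM above declare no `<version>` of their own, so their versions have to come from the parent POM or from the `import`-scoped entries under `<dependencyManagement>`. If Eclipse reports a missing version, it can help to list exactly which direct dependencies rely on that mechanism. The following is a small sketch with the Python standard library. The function name `unversioned_dependencies` and the shortened sample POM are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace declared on the <project> element of the POM above.
POM_NS = {"m": "http://maven.apache.org/POM/4.0.0"}

# Hypothetical, shortened sample modelled on the POM above.
SAMPLE_POM = """
<project xmlns="http://maven.apache.org/POM/4.0.0">
  <modelVersion>4.0.0</modelVersion>
  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.10</version>
    </dependency>
    <dependency>
      <groupId>de.tudarmstadt.ukp.dkpro.core</groupId>
      <artifactId>
        de.tudarmstadt.ukp.dkpro.core.io.text-asl
      </artifactId>
      <!-- <version>1.3.0</version> -->
    </dependency>
  </dependencies>
  <dependencyManagement>
    <dependencies>
      <dependency>
        <groupId>de.tudarmstadt.ukp.dkpro.core</groupId>
        <artifactId>de.tudarmstadt.ukp.dkpro.core-asl</artifactId>
        <version>1.4.0-SNAPSHOT</version>
        <type>pom</type>
        <scope>import</scope>
      </dependency>
    </dependencies>
  </dependencyManagement>
</project>
"""


def unversioned_dependencies(pom_xml):
    """List artifactIds of direct dependencies that declare no <version>."""
    root = ET.fromstring(pom_xml.strip())
    result = []
    # Only direct children of <project>/<dependencies> are inspected;
    # entries under <dependencyManagement> are deliberately skipped.
    for dep in root.findall("m:dependencies/m:dependency", POM_NS):
        artifact = (dep.findtext("m:artifactId", default="", namespaces=POM_NS) or "").strip()
        # A commented-out <version> is dropped by the parser and counts as missing.
        if dep.find("m:version", POM_NS) is None:
            result.append(artifact)
    return result


print(unversioned_dependencies(SAMPLE_POM))  # ['de.tudarmstadt.ukp.dkpro.core.io.text-asl']
```

Each artifact in the output needs a managed version from somewhere else. The sketch does not resolve the imported POMs, so it cannot tell whether such a version actually exists.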

![image_thumb[8] image_thumb[8]](https://thomasplagwitz.com/wp-content/uploads/2012/06/image_thumb8_thumb.png?w=244&h=50)