Archive

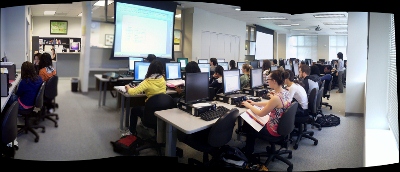

Slowing source audio for interpreting classes in the digital audio lab

- Judging from Simult. Lesson 1, text 2 of Acebo's Interpreter's Edge (ISBN 1880594323), I wonder whether some of our students would need this audio simplified (a case for personalization) to benefit from a well-adjusted i+1. I can pre-process the audio as follows:

- Where the flatlines (= natural pauses) appear in the graph above, insert an audio signal at which students can start a voice-insert recording. Example:

- We can also insert a pause with a cue at its beginning and end to limit how long students can interpret. If students operate the player manually, there is no teacher control and no exam condition, and having to manage the technology tends to distract students from the language practice.

- Slow down the audio without changing the pitch. Just make sure not to overdo it, or it will sound like drunken speech. My own time-stretching software should be able to avoid this “drunken speech” syndrome, but I have not been able to work on it since a brief stint for IALLT in summer 2011, three years ago now…

- We can use this adjusted audio with the Sanako grouping feature to personalize instruction (finding the right i+1 for each student, which is useful when proficiency varies considerably among them): How to group students into sessions (in 3 different ways) goo.gl/JgXUP/.

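The first two options above (a cue at each natural pause, and a timed answer gap bounded by cues) can be sketched in Python with only the standard library. This is a minimal sketch under stated assumptions, not the tool I use or a Sanako feature: it assumes mono audio already decoded to a list of floats in [-1, 1] (for example from a 16-bit WAV read with Python's `wave` module), treats any run of samples quieter than a hypothetical `threshold` lasting at least `min_pause_s` seconds as a "flatline", and splices a beep, `answer_s` seconds of silence and a second beep into the middle of each pause. All function names and default values are illustrative.

```python
import math


def find_pauses(samples, rate, threshold=0.02, min_pause_s=0.3):
    """Return (start, end) sample indices of 'flatlines': runs where
    |amplitude| stays below `threshold` for at least `min_pause_s` seconds.
    Assumption: a crude per-sample threshold; noisy recordings may need a
    frame-based RMS measure and a tuned threshold instead."""
    min_len = int(min_pause_s * rate)
    pauses, start = [], None
    for i, s in enumerate(samples):
        if abs(s) < threshold:
            if start is None:
                start = i  # a quiet run begins
        else:
            if start is not None and i - start >= min_len:
                pauses.append((start, i))
            start = None  # a loud sample ends any quiet run
    if start is not None and len(samples) - start >= min_len:
        pauses.append((start, len(samples)))  # quiet run reaching the end
    return pauses


def beep(rate, freq=880.0, dur_s=0.2, amp=0.5):
    """A short sine tone used as the cue signal (hypothetical defaults)."""
    return [amp * math.sin(2 * math.pi * freq * i / rate)
            for i in range(int(dur_s * rate))]


def add_interpreting_gaps(samples, rate, pauses, answer_s=5.0):
    """At the midpoint of each detected pause, splice in: start cue,
    `answer_s` seconds of silence for the student's interpretation, end cue."""
    cue = beep(rate)
    gap = [0.0] * int(answer_s * rate)
    out, prev = [], 0
    for start, end in pauses:
        mid = (start + end) // 2
        out.extend(samples[prev:mid])  # source audio up to the pause midpoint
        out.extend(cue + gap + cue)
        prev = mid
    out.extend(samples[prev:])  # remainder of the source audio
    return out
```

Splicing at the midpoint keeps some of the speaker's natural pause on both sides of the cues, so the inserted gap does not clip the end of one sentence or the start of the next. The fixed `answer_s` is what turns self-paced playback into a teacher-controlled, exam-like time limit.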
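The slow-down option can be sketched with plain overlap-add (OLA), the simplest pitch-preserving time-stretching technique: Hann-windowed frames are read from the source every `ha` samples and written to the output every `hs` samples, with `hs > ha` when slowing down, so the audio gets longer while each frame keeps its original waveform and therefore its pitch. This is an assumed textbook method, not my own time-stretching software; plain OLA produces the smeared, phasey artifacts that make over-stretched speech sound "drunk", which refinements such as WSOLA or a phase vocoder reduce. The frame size and the mono float-list input are assumptions.

```python
import math


def time_stretch(samples, factor, frame=1024):
    """Stretch `samples` to about `factor` times their length (factor > 1
    slows down) without changing pitch, using plain overlap-add (OLA)."""
    hs = frame // 2                        # synthesis hop: 50% overlap
    ha = max(1, int(round(hs / factor)))   # analysis hop in the source
    # Periodic Hann window: copies spaced frame//2 apart sum to exactly 1.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame) for i in range(frame)]
    n_frames = max(1, (len(samples) - frame) // ha + 1)
    out_len = (n_frames - 1) * hs + frame
    out = [0.0] * out_len
    norm = [0.0] * out_len                 # sum of window weights per output sample
    for k in range(n_frames):
        a, s = k * ha, k * hs              # read position, write position
        for i in range(frame):
            x = samples[a + i] if a + i < len(samples) else 0.0  # zero-pad the tail
            out[s + i] += x * window[i]
            norm[s + i] += window[i]
    # Divide by the window sum so the edges (covered by one frame) keep their level.
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, norm)]


def zero_crossing_freq(samples, rate):
    """Rough pitch estimate for a pure tone: sign changes / 2 per second."""
    crossings = sum(1 for p, q in zip(samples, samples[1:]) if (p < 0) != (q < 0))
    return crossings / 2 / (len(samples) / rate)
```

Stretching a 440 Hz test tone by a factor of 1.5 this way keeps its estimated pitch close to 440 Hz, whereas simply playing the file 1.5 times slower would drop it to about 293 Hz. Keeping `factor` modest (e.g. 1.1 to 1.3) is the practical way to avoid the "drunken speech" effect with such a simple method.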

Protected: Elti0162 Syllabus with learning materials for listening and speaking

Watch how to start and activate speech recognition from the desktop

Watch how to configure the speech recognition wizard on Windows 7

Choose the same options in your language (every time you log in, until we find a way to set these options on a per-machine level).

Learn and teach writing in your second language on Lang-8.com

To me, improving language learning with technology seems to have 2 avenues: artificial and human intelligence. Automated feedback on writing from proofing tools makes one wonder about the feasibility of the former, even though these tools have become smarter and more contextual (in MS Word 2007 and up) at spotting common errors like your/you’re or their/there. But the claim that automated essay-scoring tools already developed and deployed (at least for ESL) score similarly to teachers makes one wonder about much more… Correcting writing remains expensive!

So maybe we should look into crowdsourced writing correction, which needs no cutting-edge NLP, only well-understood web infrastructure to connect interested parties, but does require social engineering to attract and keep good contributors (and a viable business model to stay afloat: this site seems to be freemium).

Reading online comments and postings in your native language makes one wonder: can language teachers be replaced by crowdsourcing? I became aware of this language learning website, which offers native-speaker peer correction of learners’ writing, through a language learner corpus built from it. I have not thoroughly evaluated the site, but the fact that SLA researchers use its data (http://cl.naist.jp/nldata/lang-8/) seems a strong indicator that the work done on the website is of value.

Judging by the numbers accompanying the corpus (a snapshot from 2010, though a newer version is available on request), these are the most-represented L2s on lang-8.com: